Official guide example:

Create a Business Class

Person.java:

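The Person.java listing did not survive in this copy. The following is a rough sketch of what such a business class could look like. It assumes the class only needs a first and last name. The field names firstName and lastName are assumptions, chosen to match the bean-property mapping described later. It is a plain JavaBean with a no-arg constructor and getters/setters, so a framework can populate it by property name.

```java
// Hypothetical reconstruction of Person.java (field names are assumptions).
// A no-arg constructor plus getters/setters lets frameworks bind by property name.
class Person {
    private String firstName;
    private String lastName;

    public Person() {
    }

    public Person(String firstName, String lastName) {
        this.firstName = firstName;
        this.lastName = lastName;
    }

    public String getFirstName() { return firstName; }
    public void setFirstName(String firstName) { this.firstName = firstName; }

    public String getLastName() { return lastName; }
    public void setLastName(String lastName) { this.lastName = lastName; }

    @Override
    public String toString() {
        return "firstName: " + firstName + ", lastName: " + lastName;
    }
}
```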

Create an Intermediate Processor

A common paradigm in batch processing is to ingest data, transform it, and then pipe it out somewhere else. Here, you need to write a simple transformer that converts the names to uppercase.

PersonItemProcessor.java:

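The PersonItemProcessor.java listing is also missing. The sketch below shows the idea without Spring on the classpath. It declares a local ItemProcessor interface that mirrors the shape of Spring Batch's org.springframework.batch.item.ItemProcessor<I, O>, whose method is O process(I item) throws Exception. That local interface and the minimal Person class are stand-ins, not the real types. The sketch uses Locale.ROOT so that upper-casing does not depend on the JVM's default locale; that is a design choice of this sketch, not necessarily of the guide.

```java
import java.util.Locale;

// Local stand-in mirroring Spring Batch's ItemProcessor<I, O>; declared here
// only so the sketch compiles without Spring on the classpath.
interface ItemProcessor<I, O> {
    O process(I item) throws Exception;
}

// Minimal immutable Person for this sketch (field names are assumptions).
class Person {
    private final String firstName;
    private final String lastName;

    Person(String firstName, String lastName) {
        this.firstName = firstName;
        this.lastName = lastName;
    }

    String getFirstName() { return firstName; }
    String getLastName() { return lastName; }
}

// Converts both names to upper case and returns a new Person;
// the incoming item is left unchanged.
class PersonItemProcessor implements ItemProcessor<Person, Person> {
    @Override
    public Person process(Person person) {
        String firstName = person.getFirstName().toUpperCase(Locale.ROOT);
        String lastName = person.getLastName().toUpperCase(Locale.ROOT);
        return new Person(firstName, lastName);
    }
}

class PersonItemProcessorDemo {
    public static void main(String[] args) {
        Person out = new PersonItemProcessor().process(new Person("jane", "doe"));
        System.out.println(out.getFirstName() + " " + out.getLastName()); // JANE DOE
    }
}
```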

PersonItemProcessor implements Spring Batch’s ItemProcessor interface, which makes it easy to wire the code into the batch job that you will define later in this guide. According to the interface, you receive an incoming Person object and return a transformed Person whose names are upper-cased.

The input and output types need not be the same. In fact, after one source of data is read, sometimes the application’s data flow needs a different data type.
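To illustrate an output type that differs from the input type, here is a stdlib-only sketch. The ItemProcessor interface is again a local stand-in for Spring Batch's. PersonLabel and PersonToLabelProcessor are hypothetical names used only for illustration. The sketch also shows one convention of the real interface: a processor that returns null filters that item out, so the writer never sees it.

```java
import java.util.Locale;

// Local stand-in mirroring Spring Batch's ItemProcessor<I, O>.
interface ItemProcessor<I, O> {
    O process(I item) throws Exception;
}

// Minimal immutable Person for this sketch.
class Person {
    private final String firstName;
    private final String lastName;

    Person(String firstName, String lastName) {
        this.firstName = firstName;
        this.lastName = lastName;
    }

    String getFirstName() { return firstName; }
    String getLastName() { return lastName; }
}

// Hypothetical output type: one display line per person.
class PersonLabel {
    private final String text;

    PersonLabel(String text) { this.text = text; }

    String getText() { return text; }
}

// The input type (Person) and output type (PersonLabel) differ.
class PersonToLabelProcessor implements ItemProcessor<Person, PersonLabel> {
    @Override
    public PersonLabel process(Person person) {
        String last = person.getLastName();
        if (last == null || last.trim().isEmpty()) {
            return null; // returning null filters this item out
        }
        return new PersonLabel(last.toUpperCase(Locale.ROOT) + ", " + person.getFirstName());
    }
}
```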

Put Together a Batch Job

Now you need to put together the actual batch job. Spring Batch provides many utility classes that reduce the need to write custom code. Instead, you can focus on the business logic.

To configure your job, you must first create a Spring @Configuration class like the following example in BatchConfiguration.java:


For starters, the @EnableBatchProcessing annotation adds many critical beans that support jobs and saves you a lot of legwork. This example uses an in-memory database (an embedded database that Spring Boot sets up because one is on the classpath), so when the application finishes, the data is gone. The class also autowires a couple of factories that are needed further below. Now add the following beans to your BatchConfiguration class to define a reader, a processor, and a writer:


The first chunk of code defines the input, processor, and output.

- reader() creates an ItemReader. It looks for a file called sample-data.csv and parses each line item with enough information to turn it into a Person.
- processor() creates an instance of the PersonItemProcessor that you defined earlier, meant to convert the data to upper case.
- writer(DataSource) creates an ItemWriter. This one is aimed at a JDBC destination and automatically receives the DataSource that Spring Boot configures. It includes the SQL statement needed to insert a single Person, with the statement's parameters bound from the Person's Java bean properties.
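The reader's job can be illustrated without Spring. This is a simplified, hypothetical stand-in for a delimited flat-file reader. CsvPersonReader is an illustrative name, not a Spring Batch class, and reading from a java.io.Reader instead of sample-data.csv is an assumption that keeps the sketch self-contained. It splits each non-blank line on commas and maps the two tokens, in order, to firstName and lastName. Like Spring Batch's ItemReader.read(), it returns null to signal the end of the input.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Minimal immutable Person for this sketch.
class Person {
    private final String firstName;
    private final String lastName;

    Person(String firstName, String lastName) {
        this.firstName = firstName;
        this.lastName = lastName;
    }

    String getFirstName() { return firstName; }
    String getLastName() { return lastName; }
}

// Hypothetical, simplified delimited-file reader: "first,last" per line.
class CsvPersonReader {
    private final BufferedReader in;

    CsvPersonReader(Reader source) {
        this.in = new BufferedReader(source);
    }

    // Returns the next Person, or null once the input is exhausted.
    Person read() {
        try {
            String line;
            while ((line = in.readLine()) != null) {
                if (line.trim().isEmpty()) {
                    continue; // skip blank lines
                }
                String[] tokens = line.split(",", -1);
                if (tokens.length != 2) {
                    throw new IllegalArgumentException("Expected 2 fields but got: " + line);
                }
                return new Person(tokens[0].trim(), tokens[1].trim());
            }
            return null;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}

class CsvPersonReaderDemo {
    public static void main(String[] args) {
        CsvPersonReader reader = new CsvPersonReader(new StringReader("Ada,Lovelace\nAlan,Turing\n"));
        for (Person p = reader.read(); p != null; p = reader.read()) {
            System.out.println(p.getFirstName() + " " + p.getLastName());
        }
    }
}
```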

The last chunk shows the actual job configuration:


The first method defines the job, and the second one defines a single step. Jobs are built from steps, where each step can involve a reader, a processor, and a writer.

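In this job definition, you need an incrementer because jobs use a database to maintain execution state: a job instance that has already completed cannot be launched again with the same parameters, so each run needs new ones. You then list each step (though this job has only one). Finally, you end the definition, and the Java API produces a fully configured job.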
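To see why the incrementer matters, here is a stdlib-only sketch of the bookkeeping involved. CompletedInstances is a hypothetical stand-in for the job repository. It identifies a job instance by the job name plus its parameters and refuses to relaunch one that already completed. RunIdIncrementerSketch mirrors the idea behind Spring Batch's RunIdIncrementer, which bumps a "run.id" parameter so each launch is a new instance. Both classes are illustrations, not the framework's implementation, and "demoJob" is a made-up job name.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for the job repository's bookkeeping.
class CompletedInstances {
    private final Set<List<Object>> completed = new HashSet<>();

    void launch(String jobName, Map<String, Long> params) {
        // Identity = job name + (a copy of) the identifying parameters.
        List<Object> identity = Arrays.asList(jobName, new HashMap<String, Long>(params));
        if (!completed.add(identity)) {
            throw new IllegalStateException("Instance already complete: " + identity);
        }
        // The job would run here; this sketch assumes it always completes.
    }
}

// Copies the previous parameters and bumps "run.id" (starting at 1).
class RunIdIncrementerSketch {
    static Map<String, Long> next(Map<String, Long> previous) {
        Map<String, Long> next = new HashMap<>(previous);
        next.put("run.id", previous.getOrDefault("run.id", 0L) + 1);
        return next;
    }
}
```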

In the step definition, you define how much data to write at a time. In this case, it writes up to ten records at a time. Next, you configure the reader, processor, and writer by using the beans injected earlier.

chunk() is prefixed <Person,Person> because it is a generic method. This represents the input and output types of each “chunk” of processing and lines up with ItemReader<Person> and ItemWriter<Person>.
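The chunk size and the <I, O> typing can be pictured as a simple loop. The following is a simplified, single-threaded sketch of chunk-oriented processing. ChunkLoop is a hypothetical name, and the sketch omits what the real framework adds, such as transactions, restart state, retry, and skip. It reads up to chunkSize items, processes each one (a null result filters the item out), and hands the surviving outputs to the writer in a single call. With a chunk size of 10 and 25 input items, the writer receives lists of 10, 10, and 5 items.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

class ChunkLoop {
    // Returns the number of chunks handled.
    static <I, O> int run(Iterator<I> reader, Function<I, O> processor,
                          Consumer<List<O>> writer, int chunkSize) {
        if (chunkSize < 1) {
            throw new IllegalArgumentException("chunkSize must be at least 1");
        }
        int chunks = 0;
        while (reader.hasNext()) {
            // 1. Read up to chunkSize items.
            List<I> inputs = new ArrayList<>();
            while (inputs.size() < chunkSize && reader.hasNext()) {
                inputs.add(reader.next());
            }
            // 2. Process each item; a null result means "filter this item out".
            List<O> outputs = new ArrayList<>();
            for (I item : inputs) {
                O out = processor.apply(item);
                if (out != null) {
                    outputs.add(out);
                }
            }
            // 3. Write the whole chunk in one call.
            if (!outputs.isEmpty()) {
                writer.accept(outputs);
            }
            chunks++;
        }
        return chunks;
    }
}
```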

The last bit of batch configuration is a way to get notified when the job completes.


The JobCompletionNotificationListener waits for the job to finish with status BatchStatus.COMPLETED and then uses JdbcTemplate to inspect the results.
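The listener's logic can be sketched without Spring. In this hypothetical version, BatchStatus is a local enum that copies only a subset of the real org.springframework.batch.core.BatchStatus values. A Supplier stands in for the JdbcTemplate query. CompletionListenerSketch and its messages are illustrative, not the guide's code. The point is that the results are queried only when the job completed, and failed or stopped runs are ignored.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

// Local stand-in for a subset of Spring Batch's BatchStatus values.
enum BatchStatus { COMPLETED, FAILED, STOPPED }

// Hypothetical listener: inspects results only after a COMPLETED run.
class CompletionListenerSketch {
    private final Supplier<List<String>> queryPeople; // stands in for a JdbcTemplate query
    private final List<String> messages = new ArrayList<>();

    CompletionListenerSketch(Supplier<List<String>> queryPeople) {
        this.queryPeople = queryPeople;
    }

    void afterJob(BatchStatus status) {
        if (status != BatchStatus.COMPLETED) {
            return; // failed or stopped jobs are not inspected
        }
        messages.add("Job finished, verifying results");
        for (String person : queryPeople.get()) {
            messages.add("Found " + person + " in the database");
        }
    }

    List<String> getMessages() {
        return Collections.unmodifiableList(messages);
    }
}
```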

Make the Application Executable

Although batch processing can be embedded in web apps and WAR files, the simpler approach demonstrated below creates a standalone application. You package everything in a single, executable JAR file, driven by a good old Java main() method.

The Spring Initializr created an application class for you. For this simple example, it works without further modification.


@SpringBootApplication is a convenience annotation that adds all of the following:

- @Configuration: Tags the class as a source of bean definitions for the application context.
- @EnableAutoConfiguration: Tells Spring Boot to start adding beans based on classpath settings, other beans, and various property settings. For example, if spring-webmvc is on the classpath, this annotation flags the application as a web application and activates key behaviors, such as setting up a DispatcherServlet.
- @ComponentScan: Tells Spring to look for other components, configurations, and services in the com/example package, letting it find classes such as BatchConfiguration.

The main() method uses Spring Boot’s SpringApplication.run() method to launch an application. Did you notice that there was not a single line of XML? There is no web.xml file, either. This application is 100% pure Java, and you did not have to deal with configuring any plumbing or infrastructure.

Note that SpringApplication.exit() and System.exit() ensure that the JVM exits upon job completion. See the Application Exit section in Spring Boot Reference documentation for more details.

For demonstration purposes, there is code to create a JdbcTemplate, query the database, and print out the names of people the batch job inserts.

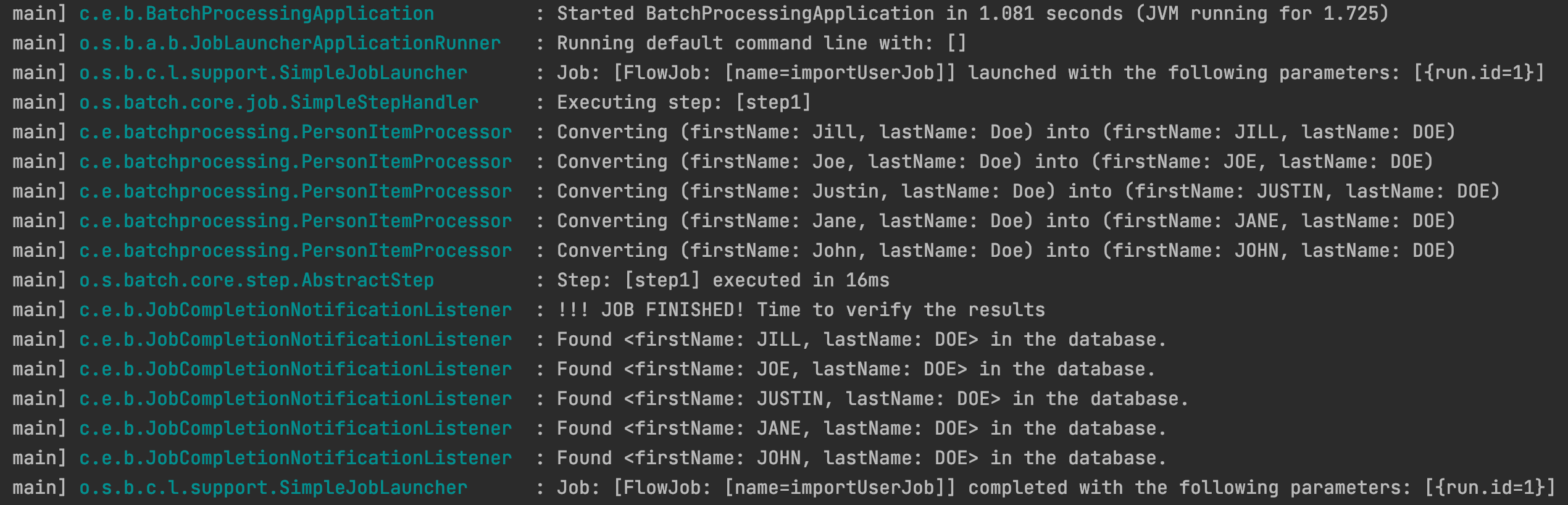

Run output:

Summary

Congratulations! You built a batch job that ingested data from a spreadsheet, processed it, and wrote it to a database.